Why Jiakaobaodian Chooses APISIX Ingress Controller

Qiang Zeng

September 29, 2022

With the growing adoption of Kubernetes (K8s for short), teams now have more options to choose from than the official default NGINX Ingress Controller, and Apache APISIX Ingress Controller has become a popular choice for many companies. More and more of them are gradually replacing their existing Ingress Controllers with APISIX Ingress Controller.

Background

Wuhan Mucang Technology Co., Ltd. was founded in 2011, and its main products are dozens of car-related applications such as Jiakaobaodian. In the more than 10 years since its founding, the company has kept pace with technology trends: it began embracing cloud native in 2019, and many of its projects have since moved onto Kubernetes.

At that time, however, K8s was still new and there were few ingress options to choose from, so we used the default Ingress Controller, NGINX Ingress Controller, for nearly 4 years. Over that period it became increasingly clear that NGINX Ingress Controller could no longer meet our needs, and we set out to choose a replacement. After comparing the mainstream Ingress Controllers, we decided to use Apache APISIX as the company's entry gateway.

The Problem with NGINX Ingress Controller

Our services use both HTTP and TCP, but only operations engineers knew exactly how to configure TCP proxying through NGINX Ingress Controller. Operations staff are limited, so we wanted to hand some basic NGINX operations and configuration over to the development teams to reduce operations costs.

Beyond that, we also ran into the following problems:

- Some configuration changes require a reload;

- TCP proxying is costly to use and cannot cover all kinds of traffic scenarios;

- Routing and traffic operations can only be defined through annotations, so configurations cannot be defined semantically or reused;

- Rewriting, circuit breaking, request forwarding, and other operations are implemented through annotations;

- There is no management platform, so operations staff have to work directly with YAML, which is error-prone;

- Poor portability;

- Not conducive to container platform integration.

For these reasons, we decided to replace our former Ingress Controller with a new one.

Why APISIX Ingress Controller

We investigated Apache APISIX and other Ingress Controllers, comparing them in terms of performance, ease of use, scalability, and platform integration, and finally selected APISIX Ingress Controller as the K8s traffic entry gateway for the following reasons.

- Good scalability;

- Supports hot reloading of configuration;

- High performance;

- A rich set of plugins;

- Supports CRDs for integration with our self-developed container platform.

Overall Architecture

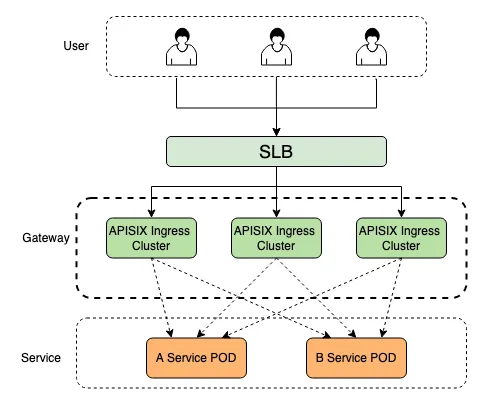

The figure above shows the overall gateway architecture. In production, user traffic first passes through an SLB (Server Load Balancer); once it enters K8s, the APISIX cluster routes it to each service. We also implement many common functions on the gateway side (canary release, flow control, API circuit breaking, traffic security control, request/response traffic control, etc.) to manage services uniformly, reduce business complexity, and save costs.

Deployment Method

Our business is deployed across several cloud platforms (Huawei Cloud, Tencent Cloud, and Alibaba Cloud), and the Helm Chart provided for APISIX Ingress Controller lets us bring services online quickly. Whenever we find a feature that could be improved while using APISIX Ingress Controller, we also submit PRs to help the community iterate on it. During deployment, we customized some configurations to fit our business, such as:

- Create separate K8s namespaces, deploy APISIX and APISIX Ingress Controller into different namespaces, and segregate traffic by product line and service importance;

- Tune Linux TCP kernel parameters in the APISIX initContainer;

- Because traffic is segregated by product line and service importance, configure the K8s ingress class name (ClassName) for each controller instance.

Isolating traffic by product line and importance keeps the damage caused by a software fault to a minimum.
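As a rough illustration of this per-tier layout, the Python sketch below derives a namespace and an ingress class name for each product line and importance level, and builds an initContainer spec that applies TCP sysctls before APISIX starts. The tier names, naming scheme, busybox image, and sysctl values are all hypothetical examples, not the settings we run in production.

```python
from dataclasses import dataclass

# Hypothetical example kernel parameters; tune them for your own workload.
TCP_SYSCTLS = {
    "net.core.somaxconn": "65535",
    "net.ipv4.tcp_max_syn_backlog": "65535",
    "net.ipv4.ip_local_port_range": "1024 65000",
}


@dataclass(frozen=True)
class GatewayTier:
    product_line: str  # hypothetical, e.g. "driving-test"
    importance: str    # hypothetical, e.g. "core" or "general"

    @property
    def namespace(self) -> str:
        # One namespace per product line and importance level.
        return f"ingress-{self.product_line}-{self.importance}"

    @property
    def ingress_class(self) -> str:
        # Each APISIX Ingress Controller instance watches only its own class.
        return f"apisix-{self.product_line}-{self.importance}"


def sysctl_init_container(sysctls: dict) -> dict:
    """Build an initContainer spec that applies kernel parameters before APISIX starts."""
    # Quote values so multi-word settings such as port ranges survive the shell.
    commands = [f"sysctl -w {key}='{value}'" for key, value in sysctls.items()]
    return {
        "name": "init-sysctl",
        "image": "busybox:1.36",
        # Writing /proc/sys from a container generally requires privileged mode.
        "securityContext": {"privileged": True},
        "command": ["sh", "-c", " && ".join(commands)],
    }
```

Each controller instance would then be configured to watch only its own ingress class, so routes created for one tier are not served by another tier's gateway. Because containers in a pod share a network namespace, network-namespaced `net.*` sysctls set in the initContainer also apply to the APISIX container.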

Integrating with Self-developed Container Platforms Using CRD

APISIX Ingress Controller supports a number of CRDs, which we use to integrate it with our own container platform. Because APISIX does not provide a Java client, we use the code generation tool provided by K8s to generate the model classes and manage the CRDs through the Kubernetes Java client's CustomObjectsApi. ApisixRoute objects are associated with container platform applications and structured alongside the platform's core objects, so both operations staff and project managers can work directly in the container platform.
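We work with the Java client, but the official Kubernetes Python client exposes the same CustomObjectsApi, which keeps the sketch below short. It builds an ApisixRoute body with one HTTP rule and creates it through `create_namespaced_custom_object`. It assumes the `apisix.apache.org/v2` API version (older controller releases used `v2beta3`); the route values and the `platform/app` label that links a route to a platform application are hypothetical.

```python
# Assumed CRD coordinates for ApisixRoute; adjust API_VERSION to your controller release.
GROUP = "apisix.apache.org"
API_VERSION = "v2"
PLURAL = "apisixroutes"


def build_apisix_route(name, host, path, service, port, app_label):
    """Return an ApisixRoute body that sends host+path traffic to one Service."""
    return {
        "apiVersion": f"{GROUP}/{API_VERSION}",
        "kind": "ApisixRoute",
        # Hypothetical label tying the route to an application in the platform.
        "metadata": {"name": name, "labels": {"platform/app": app_label}},
        "spec": {
            "http": [
                {
                    "name": f"{name}-rule",
                    "match": {"hosts": [host], "paths": [path]},
                    "backends": [{"serviceName": service, "servicePort": port}],
                }
            ]
        },
    }


def create_route(api, namespace, body):
    """Create the route; `api` is expected to be a kubernetes.client.CustomObjectsApi."""
    return api.create_namespaced_custom_object(
        group=GROUP,
        version=API_VERSION,
        namespace=namespace,
        plural=PLURAL,
        body=body,
    )
```

In a real cluster, `api` would be `kubernetes.client.CustomObjectsApi()` after calling `kubernetes.config.load_kube_config()`; the Java client's `CustomObjectsApi.createNamespacedCustomObject` takes the same group, version, namespace, plural, and body arguments.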

Application Scenarios

Authentication

Before adopting Apache APISIX, we implemented authentication on the Istio gateway. After migrating to Apache APISIX, we chose the forward-auth plugin, which moves authentication and authorization logic out to an external auth service. The gateway forwards the user's request to the auth service, and when the auth service responds with a non-2xx status, the original request is blocked and the auth service's response is returned instead. This makes it possible to return a custom error response, or redirect the user to a login page, when authentication fails.

When a client sends a request to an application, the request is first handled by the APISIX forward-auth plugin, which forwards it to the external authentication service and receives the result back at the APISIX gateway. If authentication succeeds, the request proceeds to the service normally; if it fails, an error code is returned to the client.
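The decision flow can be modeled in a few lines of Python. This is a simplified sketch, not the plugin's code: `call_auth` and `call_upstream` are hypothetical stand-ins for the auth service and the upstream, and the two header tuples loosely mirror the plugin's `request_headers` and `upstream_headers` options. Unlike the real plugin, which passes back only the headers listed in `client_headers` on failure, the sketch returns the auth service's whole response.

```python
from dataclasses import dataclass, field


@dataclass
class Response:
    status: int
    headers: dict = field(default_factory=dict)
    body: str = ""


def forward_auth(request_headers, call_auth, call_upstream,
                 forward_headers=("Authorization",),
                 upstream_headers=("X-User-ID",)):
    # 1. Send only the selected request headers to the external auth service.
    auth_req = {k: v for k, v in request_headers.items() if k in forward_headers}
    auth_resp = call_auth(auth_req)

    # 2. Any non-2xx answer blocks the request: the auth service's response
    #    (e.g. a 401 error body or a 302 redirect to a login page) goes to the client.
    if not 200 <= auth_resp.status < 300:
        return auth_resp

    # 3. On success, copy selected auth-response headers (such as a resolved
    #    user ID) onto the request that continues to the upstream service.
    upstream_req = dict(request_headers)
    for name in upstream_headers:
        if name in auth_resp.headers:
            upstream_req[name] = auth_resp.headers[name]
    return call_upstream(upstream_req)
```

Keeping the auth decision in one external service means every route behind the gateway shares the same logic, and the upstream only ever sees requests that have already passed it.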

Flow Control

Because of the nature of our business, we see five or six months of peak traffic every year. When requests surge, we have to scale out capacity manually, but the backlog of requests can prevent the newly added instances from starting successfully, which can cascade into an avalanche failure across the whole call chain. We therefore need flow and rate limiting at the cluster level.

To do this, we use APISIX's limit-conn, limit-req, and limit-count plugins to limit requests and prevent avalanche failures. Adjusting limits only requires changing plugin configuration, and thanks to APISIX's hot-reloading mechanism, nothing needs to be restarted when that configuration changes, so new limits take effect immediately. Controlling traffic this way also blocks some malicious attacks and protects the system. In addition, we have enabled HPA (Horizontal Pod Autoscaler) on the K8s platform, which automatically scales services out or in when traffic is too high or too low; combined with the APISIX flow and rate-limiting plugins, this prevents large-scale failures.
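To make the semantics of two of these plugins concrete, the sketch below models limit-req as a leaky bucket (`rate`, `burst`) and limit-count as a fixed-window counter (`count`, `time_window`), which is how the APISIX documentation describes them. It is a simplified single-process model with explicit timestamps, not APISIX's implementation; limit-conn, which caps concurrent requests, is left out.

```python
class LeakyBucket:
    """limit-req style: allow `rate` req/s, delay up to `burst` excess, reject the rest."""

    def __init__(self, rate: float, burst: float):
        self.rate = rate
        self.burst = burst
        self.excess = 0.0  # requests queued above the steady rate
        self.last = None   # timestamp (seconds) of the last accepted request

    def check(self, now: float):
        """Return ("ok", delay_seconds) or ("reject", 0.0)."""
        if self.last is None:
            excess = 0.0
        else:
            # The bucket drains at `rate` requests per second since the last request.
            excess = max(self.excess - self.rate * (now - self.last) + 1, 0.0)
            if excess > self.burst:
                # Rejected requests leave the state unchanged.
                return "reject", 0.0
        self.excess, self.last = excess, now
        return "ok", excess / self.rate


class FixedWindowCounter:
    """limit-count style: at most `count` requests per `time_window` seconds."""

    def __init__(self, count: int, time_window: float):
        self.count = count
        self.time_window = time_window
        self.window_start = None
        self.used = 0

    def check(self, now: float) -> bool:
        if self.window_start is None or now - self.window_start >= self.time_window:
            # The window opens at the first request and resets once it expires.
            self.window_start, self.used = now, 0
        if self.used >= self.count:
            return False
        self.used += 1
        return True
```

With `rate=1, burst=1`, a second request in the same instant is accepted with a one-second delay and a third is rejected; in APISIX a rejected request receives the plugin's `rejected_code` (503 by default). The leaky bucket smooths bursts, while the fixed window simply caps the total per period, which is why the two are often combined.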

Observability

For observability, we currently monitor traffic across the platform with SkyWalking. The APISIX skywalking plugin connects directly to our existing SkyWalking platform, and the SkyWalking UI makes it easy to see which nodes in a call chain are consuming the most time. Moreover, because the monitoring points sit on the gateway side, close to the user, it is easier to pinpoint where time is being spent.

With the kafka-logger plugin, the access and error logs generated by APISIX can be written directly to an Apache Kafka cluster. This lets the APISIX cluster scale horizontally as a stateless service, without needing to format disks, rotate logs, or perform other maintenance; if logs were stored locally, that extra work would prevent fast scaling. Sending logs to Kafka also lets us analyze them in real time and improve and optimize the system based on the results.
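As one example of such real-time analysis, the sketch below summarizes a slice of gateway logs read from Kafka into per-route request counts, 5xx ratios, and average latency, and flags routes above an error threshold. It assumes each message is a JSON entry with `route_id`, `response.status`, and `latency` (milliseconds) fields, as in APISIX's default log format, so verify them against your own `log_format`. The Kafka consumer is omitted, and the 5% threshold is an arbitrary example.

```python
import json
from collections import defaultdict


def summarize(messages, error_threshold=0.05):
    """Return per-route stats and the routes whose 5xx ratio exceeds the threshold."""
    stats = defaultdict(lambda: {"total": 0, "errors": 0, "latency_sum": 0.0})
    for raw in messages:  # raw may be str or bytes; json.loads accepts both
        entry = json.loads(raw)
        s = stats[entry.get("route_id", "unknown")]
        s["total"] += 1
        # Assumed field layout: {"response": {"status": 502, ...}, ...}
        if entry.get("response", {}).get("status", 0) >= 500:
            s["errors"] += 1
        s["latency_sum"] += float(entry.get("latency", 0.0))

    report = {
        route: {
            "requests": s["total"],
            "error_rate": s["errors"] / s["total"],
            "avg_latency_ms": s["latency_sum"] / s["total"],
        }
        for route, s in stats.items()
    }
    alerts = sorted(r for r, v in report.items() if v["error_rate"] > error_threshold)
    return report, alerts
```

In practice, `messages` could be the `.value` of records from a Kafka consumer such as kafka-python's `KafkaConsumer`, processed in short time windows so that a failing route shows up within seconds rather than after a log collection job runs.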

Conclusion

Since APISIX Ingress Controller has only recently gone into service with us, we are not yet using it in many scenarios. We will keep exploring further use cases and share more usage examples with the community.

More and more teams are using Apache APISIX Ingress Controller in the production environment. If you are also using APISIX Ingress Controller, we encourage you to share your use cases in the community.