How Does NGINX Reload Work? Why Is NGINX Not Hot-Reloading?

Wei Liu

September 30, 2022

I recently noticed a post on Reddit asking, "Why doesn’t NGINX support hot reloading?" That seems odd: does NGINX, one of the world’s most widely used web servers, really not support hot reloading? Have we all been using nginx -s reload incorrectly? With these questions in mind, let’s look at how NGINX reload works.

What is NGINX

NGINX is a cross-platform, open-source web server written in C. According to statistics, over 40% of the 1,000 highest-traffic websites use NGINX to handle their enormous request volumes.

Why is NGINX so popular

What advantages allow NGINX to beat other web servers and maintain such high adoption?

The main reason is that NGINX was designed for high concurrency, so it can provide stable, efficient service while handling an enormous number of concurrent requests. Moreover, compared to competitors of the same era, such as Apache and Tomcat, NGINX has several outstanding design features: an advanced event-driven architecture, a fully asynchronous mechanism for network I/O, and an extremely efficient memory management system. These designs let NGINX make full use of a server’s hardware resources and have made NGINX synonymous with "web server".

Apart from the above, there are other reasons like:

- A highly modular design gives NGINX a large number of feature-rich official modules and third-party extension modules.

- The permissive BSD license encourages developers to contribute to NGINX.

- Support for hot reloading enables NGINX to provide 24/7 service.

Among the above reasons, hot reloading is our main topic today.

What does Hot Reload do

What do we expect from hot reloading? First, client-side users shouldn’t notice that the server is reloading. Second, the server and its upstream services should load changes dynamically and handle every user request successfully, with no downtime.

Under what circumstances do we need hot reloading? In the Cloud Native era, microservices have become so popular that more and more application scenarios require frequent server-side modifications. These modifications, such as bringing a reverse-proxied domain online or offline, changing upstream addresses, and updating IP allowlists/blocklists, all depend on hot reloading.

So how does NGINX achieve hot reloading?

The Principle of NGINX Hot Reloading

When we execute the hot reload command nginx -s reload, it sends a HUP signal to NGINX’s master process. On receiving the HUP signal, the master process validates the new configuration, opens the listening ports one by one, and starts new worker processes. At this point, old and new worker processes exist simultaneously. Once the new workers are running, the master process sends a QUIT signal to the old workers to shut them down gracefully. When an old worker receives the QUIT signal, it first closes its listening sockets, so all new connections go only to the new workers. Each old worker then exits once it has finished processing its remaining connections.

In theory, NGINX’s hot reloading should meet our requirements perfectly. Does it in practice? Unfortunately, no. So what are its downsides?
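The sequence above can be sketched as a small toy model. This is not NGINX code: the Master and Worker classes and their method names are hypothetical, and real signals and processes are replaced by plain method calls so the ordering of events is easy to follow.

```python
from dataclasses import dataclass, field


@dataclass
class Worker:
    generation: int
    accepting: bool = True  # True while the worker still holds listening sockets
    connections: set = field(default_factory=set)

    @property
    def state(self) -> str:
        if self.accepting:
            return "running"
        # A worker told to quit lingers until its connections are gone.
        return "shutting down" if self.connections else "exited"


class Master:
    def __init__(self, worker_count: int = 2) -> None:
        self.worker_count = worker_count
        self.generation = 0
        self.workers = [Worker(0) for _ in range(worker_count)]

    def accept(self, conn_id: str) -> Worker:
        # Only workers that still listen can take new connections.
        candidates = [w for w in self.workers if w.accepting]
        worker = min(candidates, key=lambda w: len(w.connections))
        worker.connections.add(conn_id)
        return worker

    def close(self, conn_id: str) -> None:
        for w in self.workers:
            w.connections.discard(conn_id)
        self._reap()

    def reload(self) -> None:
        # "HUP": start the new generation first...
        old = list(self.workers)
        self.generation += 1
        self.workers += [Worker(self.generation) for _ in range(self.worker_count)]
        # ...then "QUIT" the old one: stop listening, keep draining.
        for w in old:
            w.accepting = False
        self._reap()

    def _reap(self) -> None:
        self.workers = [w for w in self.workers if w.state != "exited"]

    def states(self) -> list:
        return [(w.generation, w.state) for w in self.workers]


master = Master(worker_count=1)
master.accept("websocket-1")
master.reload()
print(master.states())  # [(0, 'shutting down'), (1, 'running')]
master.close("websocket-1")
print(master.states())  # [(1, 'running')]
```

In this model the old worker only disappears after its last connection closes, which is the behavior the rest of this article is concerned with.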

NGINX reload causes downtime

- Too frequent hot reloading would make connections unstable and lose business data.

When NGINX executes the reload command, each old worker process keeps serving its existing connections and closes them once the in-flight requests complete. However, if a client still has requests to send on such a connection, those requests fail, and unless the client retries them, their business data is lost for good. Client-side users will certainly notice this.

- In some circumstances, the recycling time of the old worker process takes so long that it affects regular business.

For example, when NGINX proxies the WebSocket protocol, it can’t tell whether a request has finished because it doesn’t parse WebSocket frames. So even after a worker process receives the quit command from the master process, it can’t exit until these connections raise an exception, time out, or disconnect.

Here is another example: when NGINX acts as a reverse proxy for TCP and UDP, it has no way of knowing whether more data will arrive on a connection before that connection is finally closed.

As a result, old worker processes often take a long time to exit, especially in industries like live streaming, media, and speech recognition. Sometimes recycling an old worker process takes half an hour or even longer. Meanwhile, if users reload the server frequently, processes in the shutting down state pile up and can eventually drive NGINX out of memory (OOM), which seriously affects the business.

# old worker processes that linger in the "shutting down" state:

nobody 6246 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 6247 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 6248 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 6249 6241 0 10:51 ? 00:00:00 nginx: worker process

nobody 7995 10419 0 10:30 ? 00:20:37 nginx: worker process is shutting down <= here

nobody 7996 10419 0 10:30 ? 00:20:37 nginx: worker process is shutting down
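To keep an eye on this, a listing like the one above can be tallied with a short script. The following is a minimal sketch: it assumes the process titles look exactly as shown in the listing, and the function name count_workers is hypothetical.

```python
def count_workers(ps_output: str) -> dict:
    """Tally NGINX workers by state from ps-style output."""
    counts = {"running": 0, "shutting down": 0}
    for line in ps_output.splitlines():
        # Check the longer title first, since it contains the shorter one.
        if "nginx: worker process is shutting down" in line:
            counts["shutting down"] += 1
        elif "nginx: worker process" in line:
            counts["running"] += 1
    return counts


sample = """\
nobody 6246 6241 0 10:51 ? 00:00:00 nginx: worker process
nobody 7995 10419 0 10:30 ? 00:20:37 nginx: worker process is shutting down
nobody 7996 10419 0 10:30 ? 00:20:37 nginx: worker process is shutting down
"""
print(count_workers(sample))  # {'running': 1, 'shutting down': 2}
```

A steadily growing "shutting down" count after repeated reloads is the symptom described above.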

In summary, executing nginx -s reload gives us hot reloading that was good enough in the past. However, with the rapid development of microservices and Cloud Native, this solution no longer meets users’ requirements.

If your business updates weekly or daily, NGINX reloading can still satisfy your needs. But what if updates happen hourly or every minute? For example, suppose you have 100 NGINX servers. If each reloads once an hour, that is 2,400 reloads per day; if each reloads once a minute, that is 144,000 reloads per day, which is unacceptable.

We need a solution that avoids process switching and still makes configuration changes take effect immediately.
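The fleet-wide arithmetic is simple enough to check in a few lines. The function name below is only illustrative.

```python
def reloads_per_day(servers: int, reloads_per_hour: int) -> int:
    """Total reloads across the fleet in 24 hours."""
    return servers * reloads_per_hour * 24


print(reloads_per_day(100, 1))     # 2400 (once an hour)
print(reloads_per_day(100, 60))    # 144000 (once a minute)
print(reloads_per_day(100, 3600))  # 8640000 (once a second, for comparison)
```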

Hot Reloading That Takes Effect Immediately in Memory

Apache APISIX was designed from the start to resolve the NGINX hot reloading issue. Built on the NGINX and Lua tech stack, APISIX is a cloud-native, high-performance, fully dynamic microservice API gateway that uses etcd as its core configuration center. Loading new configuration requires no server restart: changes to upstream services, routes, or plugins take effect without one. But if APISIX is built on the NGINX tech stack, how does it get around NGINX’s limitations to achieve perfect hot reloading?

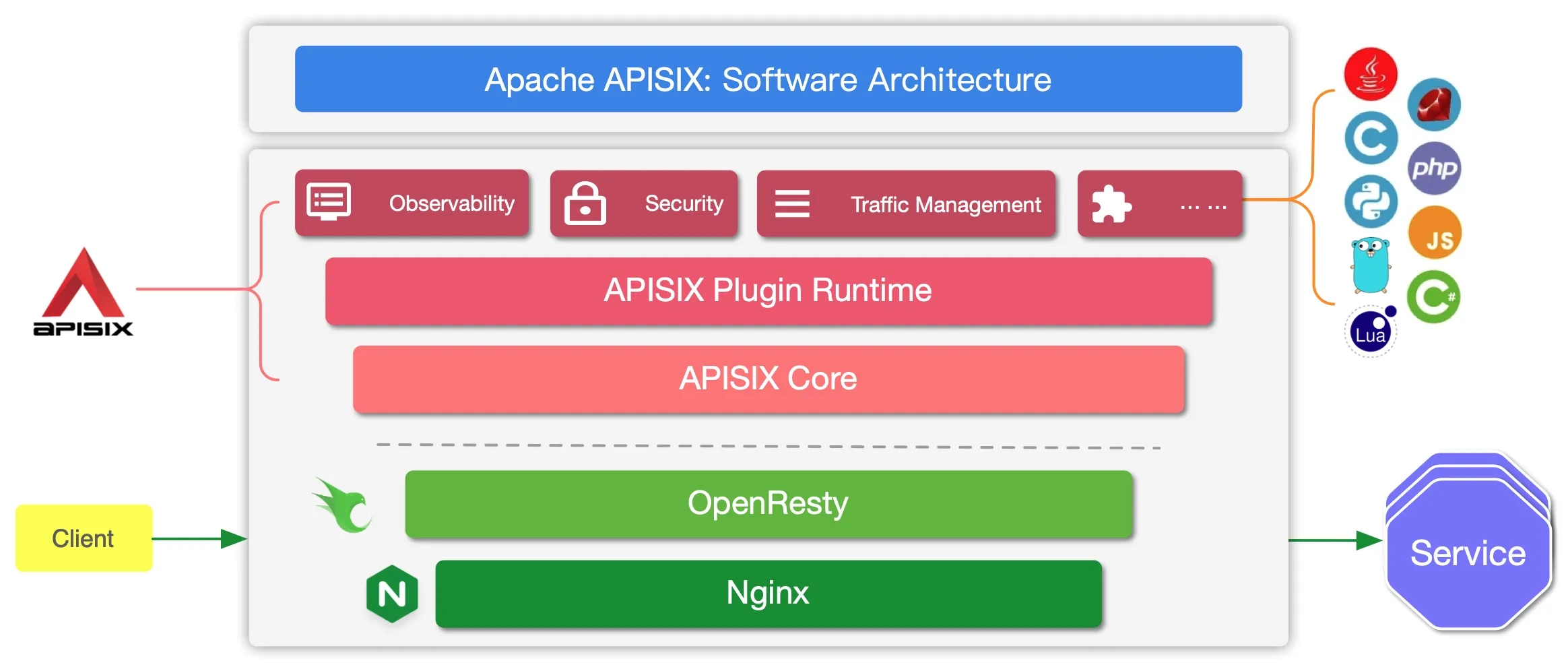

First, let’s see the software architecture of Apache APISIX:

APISIX achieves hot reloading because it keeps all configuration in the APISIX Core and Plugin Runtime, where it can be assigned dynamically. By contrast, parameters that NGINX reads from its configuration files only take effect after a reload. To configure routes dynamically, Apache APISIX defines just one server block containing a single location in the NGINX configuration. That location is the main entry point: every request passes through it first, and APISIX Core then dynamically selects the upstream for it. Apache APISIX’s route module supports adding, modifying, and deleting routes while the server is running. In other words, it achieves dynamic reloading, and none of these changes are noticeable to users or disruptive to regular business.
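The single-entry-point idea can be illustrated with a rough sketch. This is not how APISIX is implemented (APISIX runs Lua inside NGINX); the RouteTable class, the handle function, and the event names are hypothetical and only show why an in-memory table needs no process switch.

```python
import threading
from typing import Dict, Optional


class RouteTable:
    """In-memory routes (host -> upstream address), changeable at runtime."""

    def __init__(self) -> None:
        self._routes: Dict[str, str] = {}
        self._lock = threading.Lock()

    def apply(self, event: str, host: str, upstream: Optional[str] = None) -> None:
        # Each configuration change arrives as a small event, not a full reload.
        with self._lock:
            if event == "put":
                if upstream is None:
                    raise ValueError("put requires an upstream")
                self._routes[host] = upstream
            elif event == "delete":
                self._routes.pop(host, None)
            else:
                raise ValueError(f"unknown event: {event}")

    def match(self, host: str) -> Optional[str]:
        with self._lock:
            return self._routes.get(host)


def handle(table: RouteTable, host: str) -> str:
    """The single entry point every request passes through."""
    upstream = table.match(host)
    if upstream is None:
        return "404 no route"
    return f"proxy to {upstream}"


table = RouteTable()
print(handle(table, "example.com"))  # 404 no route
table.apply("put", "example.com", "10.0.0.1:8080")
print(handle(table, "example.com"))  # proxy to 10.0.0.1:8080
table.apply("put", "example.com", "10.0.0.2:8080")
print(handle(table, "example.com"))  # proxy to 10.0.0.2:8080
table.apply("delete", "example.com")
print(handle(table, "example.com"))  # 404 no route
```

Because the process and its listening sockets never change, existing connections are untouched while routes are added, modified, or removed.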

Now consider a classic scenario: to add a reverse proxy for a new domain, we only need to create an upstream in APISIX and add a new route. NGINX doesn’t need to restart during this process. The plugin system offers another example: APISIX can use the ip-restriction plugin to implement IP allowlists and blocklists. All of these updates are dynamic and require no server restart. Thanks to etcd, configuration changes are delivered as incremental updates, so every change takes effect immediately and provides the best user experience.
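As a hedged illustration of this scenario, the snippet below builds (but does not send) an Admin API request that creates a route for a new domain with an ip-restriction allowlist. The Admin API address, the API key placeholder, the route ID, and the helper name are assumptions; for brevity the upstream is inlined in the route instead of being created separately. Check the field names against the Admin API documentation for your APISIX version.

```python
import json
import urllib.request

# Assumptions: adjust the address and key for your own deployment.
ADMIN_URL = "http://127.0.0.1:9180/apisix/admin"
API_KEY = "replace-with-your-admin-key"


def build_route_request(route_id: str, host: str, upstream_node: str,
                        allowlist: list) -> urllib.request.Request:
    """Build a PUT request that creates or replaces one route."""
    body = {
        "uri": "/*",
        "host": host,
        # Upstream inlined in the route for brevity.
        "upstream": {"type": "roundrobin", "nodes": {upstream_node: 1}},
        # ip-restriction takes the allowed addresses in its "whitelist" field.
        "plugins": {"ip-restriction": {"whitelist": allowlist}},
    }
    return urllib.request.Request(
        url=f"{ADMIN_URL}/routes/{route_id}",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"X-API-KEY": API_KEY, "Content-Type": "application/json"},
    )


req = build_route_request("1", "example.com", "127.0.0.1:8080", ["10.0.0.0/8"])
print(req.get_method(), req.full_url)
# PUT http://127.0.0.1:9180/apisix/admin/routes/1
# Sending it would be: urllib.request.urlopen(req)
```

No nginx -s reload appears anywhere in this flow; the change is a single HTTP call against the running gateway.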

Conclusion

During an NGINX hot reload, old and new worker processes can coexist under some circumstances, which wastes resources. Also, reloading too frequently carries a small risk of losing business data. With Cloud Native and microservices, service updates are becoming more and more frequent and API management strategies increasingly varied, which places new requirements on hot reloading.

NGINX’s hot reloading no longer satisfies these business requirements. It is time to switch to Apache APISIX, an API gateway with a more advanced hot reloading strategy for the Cloud Native era. In addition, after switching to APISIX, users get dynamic, unified management of their API services, which can significantly improve management efficiency.