How Does APISIX Ingress Support Thousands of Pod Replicas?

Xin Rong

October 21, 2022

1,000+ Kubernetes Pods

Pods are the smallest deployable objects in Kubernetes. A Pod typically runs a single instance of an application, and we give each Pod only limited resources so that the application scales by adding Pods rather than by growing a single one. When traffic rises, we handle it with horizontal scaling.

For example, Black Friday drives a rapid increase in traffic for online retailers. To absorb it, we autoscale the services: the autoscaling strategy deploys more replicas, which results in more Pods.

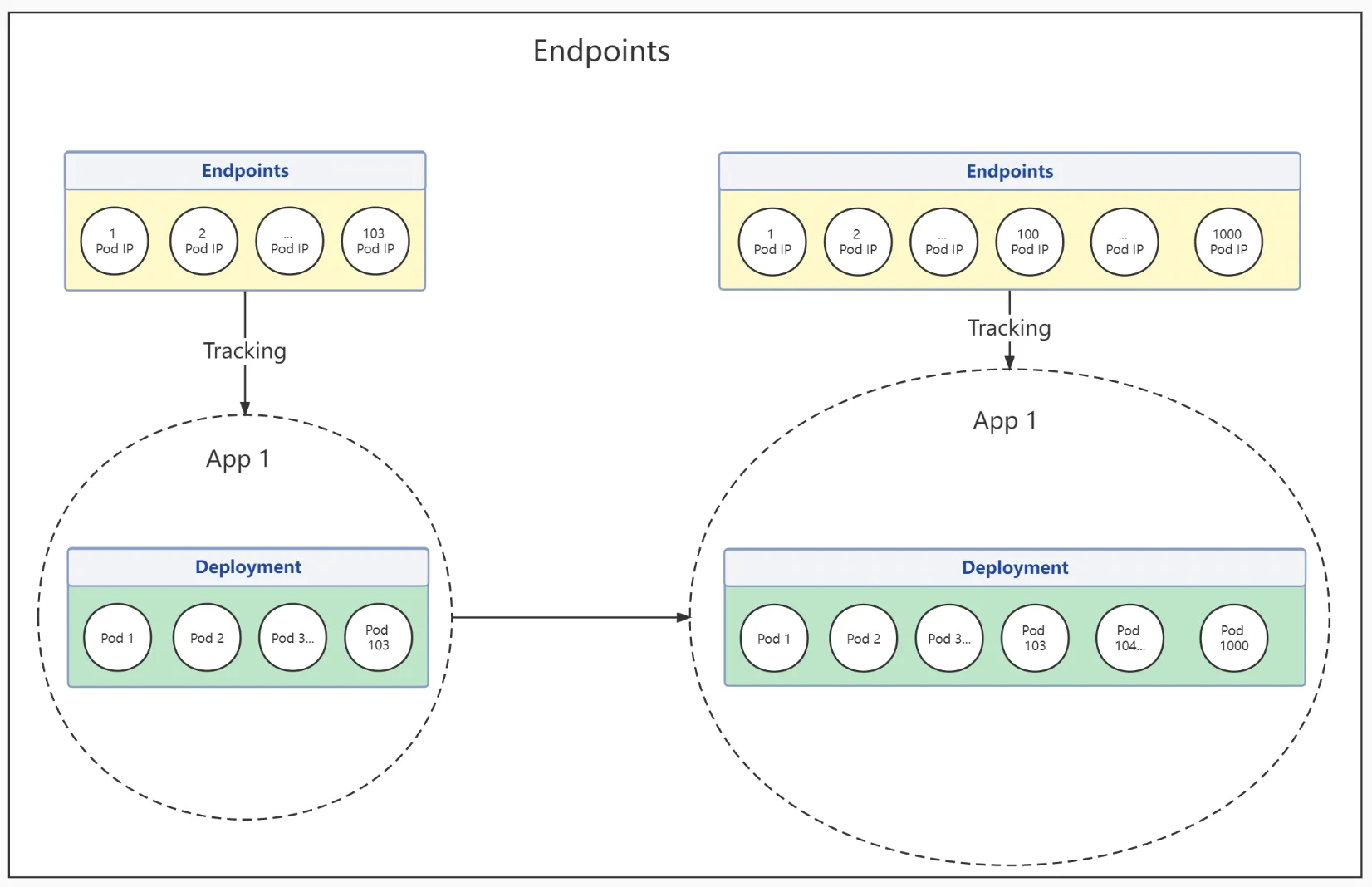

Each Pod has a dynamic IP address. The Endpoints API provides a straightforward way to track network endpoints in Kubernetes, so we can load balance by watching Pod IP changes in a timely manner. However, as Kubernetes clusters and Services grow to send more traffic to more Pods, as in the Black Friday scenario above, each Endpoints object grows with the number of Pods. The limitations of the Endpoints API then become more visible, and it can even become a performance bottleneck.

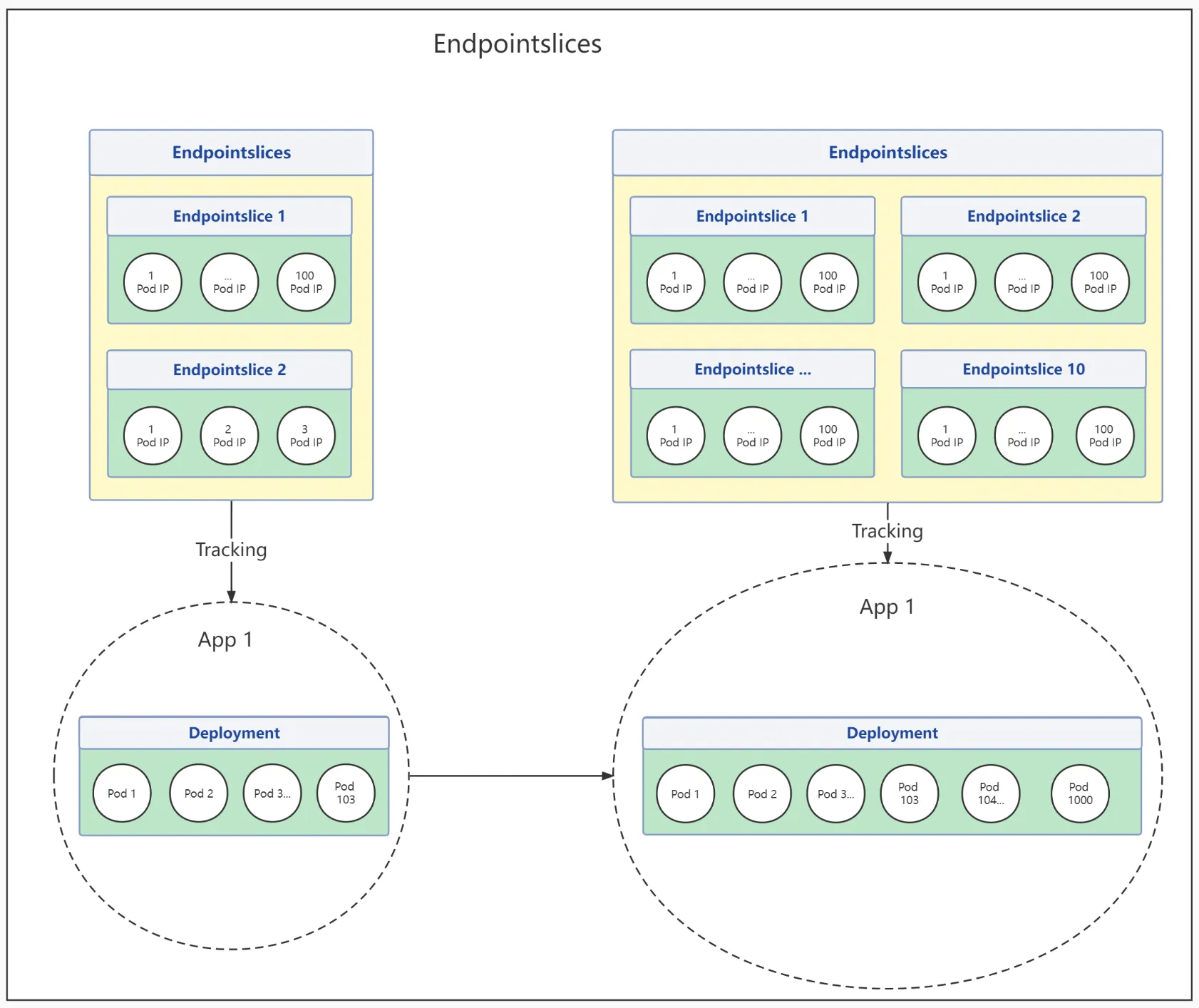

To address the limitations of the Endpoints API, Kubernetes introduced the EndpointSlice API, which has been stable since v1.21. It resolves the performance problems of handling a very large number of network endpoints in the Endpoints API, and it is more scalable and extensible.

The difference between the two shows up in the following diagrams:

- Changes to Endpoints during a traffic spike

- Changes to EndpointSlices during a traffic spike

How do applications in Kubernetes communicate with each other? What are the specific differences between Endpoints and EndpointSlice? How do Pods relate to Endpoints and EndpointSlice? How does APISIX support these features, and how do you install and use them? This article focuses on these questions.

How to access applications in Kubernetes

Via Service

Each Pod in Kubernetes has its own unique IP address. Usually, a Service uses a selector to find its Pods, exposes them under a single DNS name, and load balances across them. Applications inside the Kubernetes cluster can then use DNS to communicate with each other.

When a Service is created, Kubernetes associates it with an Endpoints resource. However, if the Service does not specify a selector, Kubernetes does not create an Endpoints resource for it automatically.
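The selector behaviour described above can be sketched in a few lines of Python. This is a simplified model, not how Kubernetes implements it: the function names are hypothetical, and the Pod with the `other` label and the address `10.0.0.9` is invented for illustration.

```python
def matches(selector, labels):
    """True when every key/value pair of the selector appears in the Pod labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints_for(selector, pods, port):
    """Return the ip:port list a Service with this selector would expose.

    Returns None for an empty selector, mirroring the rule that no
    Endpoints resource is created automatically in that case.
    """
    if not selector:
        return None
    return [f"{pod['ip']}:{port}" for pod in pods if matches(selector, pod["labels"])]


pods = [
    {"ip": "10.1.36.133", "labels": {"app": "httpbin-deployment"}},
    {"ip": "10.1.36.125", "labels": {"app": "httpbin-deployment"}},
    {"ip": "10.0.0.9", "labels": {"app": "other"}},  # hypothetical unrelated Pod
]

selected = endpoints_for({"app": "httpbin-deployment"}, pods, 80)
print(selected)
```

Only the two Pods carrying the matching label end up behind the Service; the unrelated Pod is ignored.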

What is Endpoints, and what is the relationship with Pod

Endpoints is a resource object in Kubernetes, stored in etcd, that holds references to the access addresses of the set of Pods matching a Service. Each Service has only one Endpoints resource. Kubernetes watches the Service's Pods and updates the Endpoints resource whenever any of them changes.

- Deploy 3 httpbin replicas and check the Pods' status, including their IP addresses

```
$ kubectl get pods -o wide
NAME                                 READY   STATUS    RESTARTS        AGE   IP            NODE             NOMINATED NODE   READINESS GATES
httpbin-deployment-fdd7d8dfb-8sxxq   1/1     Running   0               49m   10.1.36.133   docker-desktop   <none>           <none>
httpbin-deployment-fdd7d8dfb-bjw99   1/1     Running   4 (5h39m ago)   23d   10.1.36.125   docker-desktop   <none>           <none>
httpbin-deployment-fdd7d8dfb-r5nf9   1/1     Running   0               49m   10.1.36.131   docker-desktop   <none>           <none>
```

- Create the httpbin Service and check its Endpoints resource

```
$ kubectl get endpoints httpbin
NAME      ENDPOINTS                                        AGE
httpbin   10.1.36.125:80,10.1.36.131:80,10.1.36.133:80   23d
```

The two examples above show that every network endpoint in the httpbin Endpoints resource matches a Pod's IP address.

Disadvantages of Endpoints

- Endpoints has a capacity limit: if an Endpoints resource has more than 1000 endpoints, the Endpoints controller truncates it to 1000.

- A Service can have only one Endpoints resource, so that resource must store the IP address and other network information of every Pod backing the Service. As a result, the Endpoints resource becomes huge, and the whole object has to be updated whenever a single network endpoint in it changes. When the workload changes endpoints frequently, this huge API object is sent between Kubernetes components again and again, which degrades their performance.
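A minimal sketch of the first limitation, assuming the 1000-endpoint cap stated above. The function name and the generated Pod IPs are hypothetical; the real controller works on API objects rather than plain lists.

```python
MAX_ENDPOINTS = 1000  # capacity limit of a single Endpoints resource, as stated above


def build_endpoints(pod_ips):
    """Model one Endpoints object: all Pod IPs in a single list, cut off at the cap."""
    kept = pod_ips[:MAX_ENDPOINTS]
    dropped = len(pod_ips) - len(kept)
    return kept, dropped


# 1200 made-up Pod IPs, purely for illustration
pod_ips = [f"10.1.{i // 250}.{i % 250 + 1}" for i in range(1200)]

kept, dropped = build_endpoints(pod_ips)
print(f"kept={len(kept)} dropped={dropped}")
```

In this model, the 200 Pods beyond the cap simply never appear in the object, so anything that reads only Endpoints cannot route to them.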

What is EndpointSlice

EndpointSlice is a more scalable and extensible alternative to Endpoints that addresses the performance problems caused by processing a very large number of network endpoints. It also provides an extensible platform for additional features such as topological routing. The API has been stable since Kubernetes v1.21.

The EndpointSlice API was designed to address this issue with an approach similar to sharding. Instead of tracking all Pod IPs for a Service with a single Endpoints resource, we split them into multiple smaller EndpointSlices.

By default, the control plane creates and manages EndpointSlices to have no more than 100 endpoints each. You can configure this with the --max-endpoints-per-slice kube-controller-manager flag, up to 1000.
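The sharding idea can be sketched as a simple chunking function. This is a simplified model under stated assumptions: the function name is hypothetical, the Pod IPs are generated for illustration, and the real control plane does not necessarily pack every slice to capacity.

```python
def split_into_slices(pod_ips, max_per_slice=100):
    """Split one long endpoint list into slices of at most max_per_slice entries."""
    if not 1 <= max_per_slice <= 1000:
        # The article states the flag can be raised up to 1000.
        raise ValueError("max_per_slice must be between 1 and 1000")
    return [
        pod_ips[start:start + max_per_slice]
        for start in range(0, len(pod_ips), max_per_slice)
    ]


# 250 made-up Pod IPs, purely for illustration
pod_ips = [f"10.1.{i // 250}.{i % 250 + 1}" for i in range(250)]

slices = split_into_slices(pod_ips)
print([len(s) for s in slices])
```

With the default of 100 endpoints per slice, 250 Pods land in three slices, and every Pod IP still appears exactly once.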

Why do we need it

Consider a scenario

Assume there is a Service backed by 2000 Pods, which could end up with a 1.0 MB Endpoints resource. In production, if this Service goes through rolling updates or endpoint migrations, the Endpoints resource is updated frequently. Consider that a rolling update replaces every Pod. Because etcd limits the maximum size of any request, Kubernetes caps an Endpoints resource at 1000 endpoints. If there are more than 1000 endpoints, the Endpoints resource holds no reference to the additional network endpoints.

If the Service needs several rolling updates for some reason, a huge API object is transferred among Kubernetes components each time, which significantly degrades their performance.

What if we use EndpointSlice

Suppose there is a Service backed by 2000 Pods and we configure 100 endpoints per EndpointSlice; we then end up with 20 EndpointSlices. Now, when a Pod is added or removed, only one small EndpointSlice needs to be updated, which is a remarkable improvement in scalability and network extensibility.

The difference becomes even more noticeable when every Service autoscales: during a traffic spike the Service deploys more Pods and its endpoint data is updated frequently, and with EndpointSlice each of those updates touches only a small object. More importantly, because the Pod IPs of a Service no longer have to be stored in a single resource, we do not have to worry about the size limit for objects stored in etcd.
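The arithmetic behind this comparison can be written out as a rough model. It assumes one object update per Pod change, counts endpoint entries rather than bytes, and ignores the 1000-endpoint cap so that it follows the 2000-Pod thought experiment. All names are hypothetical.

```python
import math

TOTAL_PODS = 2000     # Pods backing the Service in the scenario above
MAX_PER_SLICE = 100   # endpoints per EndpointSlice in the scenario above


def objects_needed(total, max_per_object):
    """Number of objects required to hold all endpoints."""
    return math.ceil(total / max_per_object)


def entries_sent_per_change(total, max_per_object):
    """Endpoint entries re-sent to each watcher when one Pod changes."""
    return min(total, max_per_object)


slice_count = objects_needed(TOTAL_PODS, MAX_PER_SLICE)
endpoints_payload = entries_sent_per_change(TOTAL_PODS, TOTAL_PODS)  # one big object
slice_payload = entries_sent_per_change(TOTAL_PODS, MAX_PER_SLICE)   # one small slice

# A rolling update replaces every Pod, so it triggers roughly one update per Pod.
rolling_endpoints = TOTAL_PODS * endpoints_payload
rolling_slices = TOTAL_PODS * slice_payload

print(slice_count, endpoints_payload, slice_payload)
print(rolling_endpoints, rolling_slices)
```

Under these assumptions, a full rolling update re-sends about 4,000,000 entries per watcher with Endpoints and about 200,000 with EndpointSlices, a 20x reduction.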

Conclusion of Endpoints VS EndpointSlice

Because EndpointSlice has been stable since Kubernetes v1.21, all of the conclusions below refer to Kubernetes v1.21.

Use cases of Endpoints:

- There is a need for autoscaling, but the number of Pods is relatively small, and resource transfers won't cause huge network traffic and extra handling needs.

- There is no need for autoscaling, and the number of Pods is not huge. Even with a fixed number of Pods, though, the Service still goes through rolling updates and failures.

Use cases of EndpointSlice:

- There is a need for autoscaling, and the number of Pods is huge (hundreds of Pods).

- The number of Pods is enormous. Because an Endpoints resource holds at most 1000 endpoints, any Service backed by more than 1000 Pods has to use EndpointSlice.

Practice in APISIX Ingress

APISIX Ingress implements load balancing and health checks by watching changes to Endpoints or EndpointSlice resources. To stay compatible with Kubernetes v1.16+, APISIX Ingress watches Endpoints by default after installation.

If your cluster runs Kubernetes v1.21+, specify watchEndpointSlice=true when installing APISIX Ingress to enable EndpointSlice support.

Note: In clusters running Kubernetes v1.21+, we recommend enabling the EndpointSlice feature. Otherwise, because APISIX Ingress is watching the Endpoints resource object, configuration is lost when the number of Pods exceeds the value of the --max-endpoints-per-slice flag.
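The version rule above can be expressed as a small helper, for example in a deployment script. This is only a sketch: the function name is hypothetical, and it assumes version strings of the form `v1.21.3`.

```python
import re


def should_watch_endpointslice(cluster_version):
    """Return True when the cluster version is v1.21 or newer."""
    match = re.match(r"v?(\d+)\.(\d+)", cluster_version)
    if match is None:
        raise ValueError(f"unrecognised version string: {cluster_version!r}")
    major, minor = int(match.group(1)), int(match.group(2))
    # Tuple comparison handles both the major and the minor component.
    return (major, minor) >= (1, 21)


for version in ("v1.16.0", "v1.20.15", "v1.21.0", "v1.25.3"):
    print(version, should_watch_endpointslice(version))
```

The result would then decide whether watchEndpointSlice is set to true during installation.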

Creating a Service backed by 20 Pod replicas

Configure the httpbin application and its Service in Kubernetes, and create 20 Pod replicas

- kubectl apply -f httpbin-deploy.yaml

```yaml
# httpbin-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: httpbin-deployment
spec:
  replicas: 20
  selector:
    matchLabels:
      app: httpbin-deployment
  strategy:
    rollingUpdate:
      maxSurge: 50%
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: httpbin-deployment
    spec:
      terminationGracePeriodSeconds: 0
      containers:
        - livenessProbe:
            failureThreshold: 3
            initialDelaySeconds: 2
            periodSeconds: 5
            successThreshold: 1
            tcpSocket:
              port: 80
            timeoutSeconds: 2
          readinessProbe:
            failureThreshold: 3
            initialDelaySeconds: 2
            periodSeconds: 5
            successThreshold: 1
            tcpSocket:
              port: 80
            timeoutSeconds: 2
          image: "kennethreitz/httpbin:latest"
          imagePullPolicy: IfNotPresent
          name: httpbin-deployment
          ports:
            - containerPort: 80
              name: "http"
              protocol: "TCP"
---
apiVersion: v1
kind: Service
metadata:
  name: httpbin
spec:
  selector:
    app: httpbin-deployment
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 80
  type: ClusterIP
```

Proxy via APISIX Ingress

- Use Helm to install APISIX Ingress

Pass --set ingress-controller.config.kubernetes.watchEndpointSlice=true to enable EndpointSlice support.

```shell
helm repo add apisix https://charts.apiseven.com
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo update
kubectl create ns ingress-apisix
helm install apisix apisix/apisix \
  --set gateway.type=NodePort \
  --set ingress-controller.enabled=true \
  --namespace ingress-apisix \
  --set ingress-controller.config.apisix.serviceNamespace=ingress-apisix \
  --set ingress-controller.config.kubernetes.watchEndpointSlice=true
```

- Use CRD resource to proxy

Whether APISIX Ingress watches Endpoints or EndpointSlice is transparent to users: the route configuration is the same in both cases.

```yaml
apiVersion: apisix.apache.org/v2
kind: ApisixRoute
metadata:
  name: httpbin-route
spec:
  http:
    - name: rule
      match:
        hosts:
          - httpbin.org
        paths:
          - /get
      backends:
        - serviceName: httpbin
          servicePort: 80
```

- Query the Admin API from inside the APISIX Pod: the nodes field of the upstream object contains the IP addresses of all 20 Pods.

```shell
kubectl exec -it ${Pod for APISIX} -n ingress-apisix -- curl "http://127.0.0.1:9180/apisix/admin/upstreams" -H 'X-API-KEY: edd1c9f034335f136f87ad84b625c8f1'
```

```
{
  "action": "get",
  "count": 1,
  "node": {
    "key": "\/apisix\/upstreams",
    "nodes": [
      {
        "value": {
          "hash_on": "vars",
          "desc": "Created by apisix-ingress-controller, DO NOT modify it manually",
          "pass_host": "pass",
          "nodes": [
            {
              "weight": 100,
              "host": "10.1.36.100",
              "priority": 0,
              "port": 80
            },
            {
              "weight": 100,
              "host": "10.1.36.101",
              "priority": 0,
              "port": 80
            },
            {
              "weight": 100,
              "host": "10.1.36.102",
              "priority": 0,
              "port": 80
            },
            {
              "weight": 100,
              "host": "10.1.36.103",
              "priority": 0,
              "port": 80
            },
            {
              "weight": 100,
              "host": "10.1.36.104",
              "priority": 0,
              "port": 80
            },
            {
              "weight": 100,
              "host": "10.1.36.109",
              "priority": 0,
              "port": 80
            },
            {
              "weight": 100,
              "host": "10.1.36.92",
              "priority": 0,
              "port": 80
            }
            ... // ignore 13 nodes below
            // 10.1.36.118
            // 10.1.36.115
            // 10.1.36.116
            // 10.1.36.106
            // 10.1.36.113
            // 10.1.36.111
            // 10.1.36.108
            // 10.1.36.114
            // 10.1.36.107
            // 10.1.36.110
            // 10.1.36.105
            // 10.1.36.112
            // 10.1.36.117
          ],
          "labels": {
            "managed-by": "apisix-ingress-controller"
          },
          "type": "roundrobin",
          "name": "default_httpbin_80",
          "scheme": "http"
        },
        "key": "\/apisix\/upstreams\/5ce57b8e"
      }
    ],
    "dir": true
  }
}
```

- These nodes match the network endpoints in the EndpointSlice

```yaml
addressType: IPv4
apiVersion: discovery.k8s.io/v1
endpoints:
- addresses:
  - 10.1.36.92
  ...
- addresses:
  - 10.1.36.100
  ...
- addresses:
  - 10.1.36.104
  ...
- addresses:
  - 10.1.36.102
  ...
- addresses:
  - 10.1.36.101
  ...
- addresses:
  - 10.1.36.103
  ...
- addresses:
  - 10.1.36.109
  ...
- addresses:
  - 10.1.36.118
  ...
- addresses:
  - 10.1.36.115
  ...
- addresses:
  - 10.1.36.116
  ...
- addresses:
  - 10.1.36.106
  ...
- addresses:
  - 10.1.36.113
  ...
- addresses:
  - 10.1.36.111
  ...
- addresses:
  - 10.1.36.108
  ...
- addresses:
  - 10.1.36.114
  ...
- addresses:
  - 10.1.36.107
  ...
- addresses:
  - 10.1.36.110
  ...
- addresses:
  - 10.1.36.105
  ...
- addresses:
  - 10.1.36.112
  ...
- addresses:
  - 10.1.36.117
  ...
kind: EndpointSlice
metadata:
  labels:
    endpointslice.kubernetes.io/managed-by: endpointslice-controller.k8s.io
    kubernetes.io/service-name: httpbin
  name: httpbin-dkvtr
  namespace: default
ports:
- name: http
  port: 80
  protocol: TCP
```
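Checking 20 addresses by eye is error-prone, so the comparison can also be done with a set difference. The sketch below hard-codes the last octets from the two listings in this article; in practice you would parse them from the Admin API response and from the EndpointSlice object. The variable names are hypothetical.

```python
PREFIX = "10.1.36."

# Last octets of the upstream nodes, in the order listed in the Admin API output
upstream_octets = [100, 101, 102, 103, 104, 109, 92, 118, 115, 116,
                   106, 113, 111, 108, 114, 107, 110, 105, 112, 117]

# Last octets of the EndpointSlice addresses, in the order listed in the manifest
slice_octets = [92, 100, 104, 102, 101, 103, 109, 118, 115, 116,
                106, 113, 111, 108, 114, 107, 110, 105, 112, 117]

upstream_hosts = {f"{PREFIX}{octet}" for octet in upstream_octets}
slice_addresses = {f"{PREFIX}{octet}" for octet in slice_octets}

# Addresses present on one side but not on the other
missing_in_upstream = sorted(slice_addresses - upstream_hosts)
extra_in_upstream = sorted(upstream_hosts - slice_addresses)

print(len(upstream_hosts), len(slice_addresses))
print(missing_in_upstream, extra_in_upstream)
```

Both difference lists come out empty, so the 20 upstream nodes and the 20 EndpointSlice addresses are the same set, just listed in a different order.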

Conclusion

This article introduced scenarios in which Kubernetes needs to run a massive number of Pods and the problems that arise. It compared Endpoints with EndpointSlice and showed how to enable the EndpointSlice feature when installing APISIX Ingress. If your cluster runs Kubernetes v1.21+, we recommend enabling EndpointSlice during APISIX Ingress installation. Doing so avoids losing configuration, and you no longer need to worry about the value of the --max-endpoints-per-slice flag.